Today we are going to talk about a very interesting topic: how to write a prompt for ChatGPT, Bard, or any other Large Language Model (LLM) so that you get the best results from it.

Prompting is the “art of asking questions in the right way”. In the real world, if we want to build a better bond with anyone, we need to ask better questions; this is considered part of good communication skills.

Let us take an analogy to understand this more clearly. Suppose you have an anxiety problem and you go to a doctor for a consultation. The conversation looks something like this:

You: “I have an anxiety problem.”

Doctor: “Can you explain it in more detail, like when you get it and what triggers it?”

You: “When I go on stage and have to say something, I get short of breath, I am at a loss for words, my hands shake, and my voice quavers.”

Now you have explained it clearly: what the problem is, in what context it occurs, and what the symptoms are.

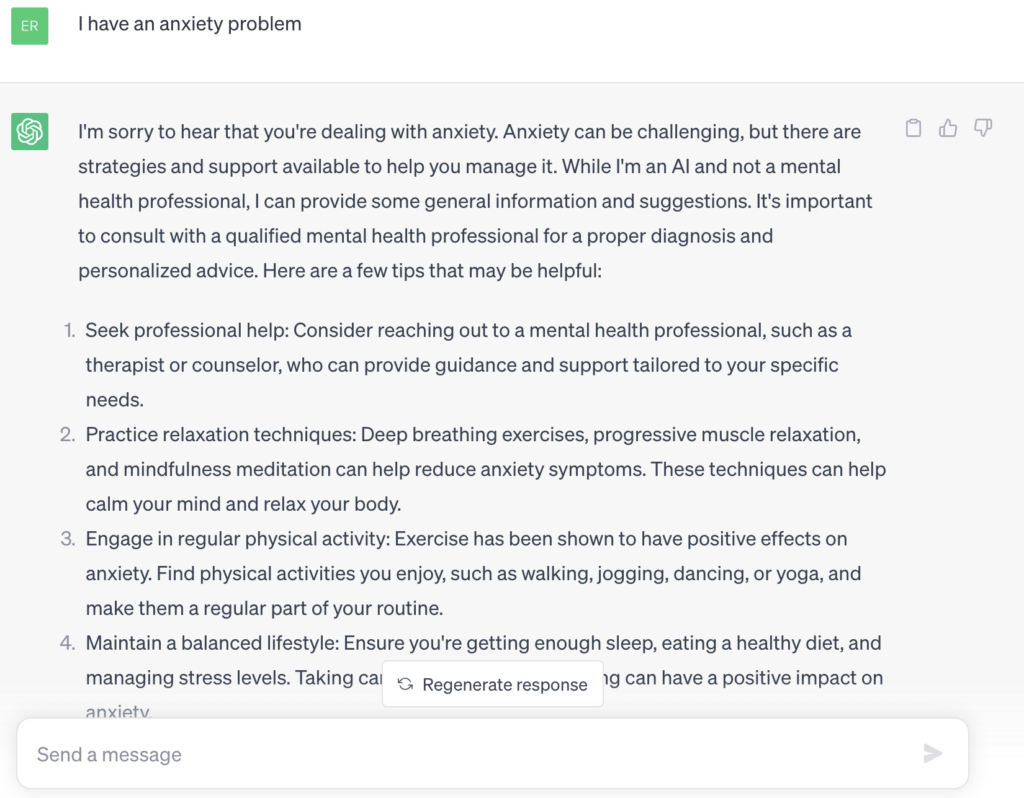

ChatGPT, Bard, and similar platforms expect the same kind of good questioning from you. If, rather than visiting a doctor, you visited chat.openai.com and asked ChatGPT, the conversation would go something like this:

When the prompt is not clearly written, ChatGPT gives general answers rather than answers specific to the problem.

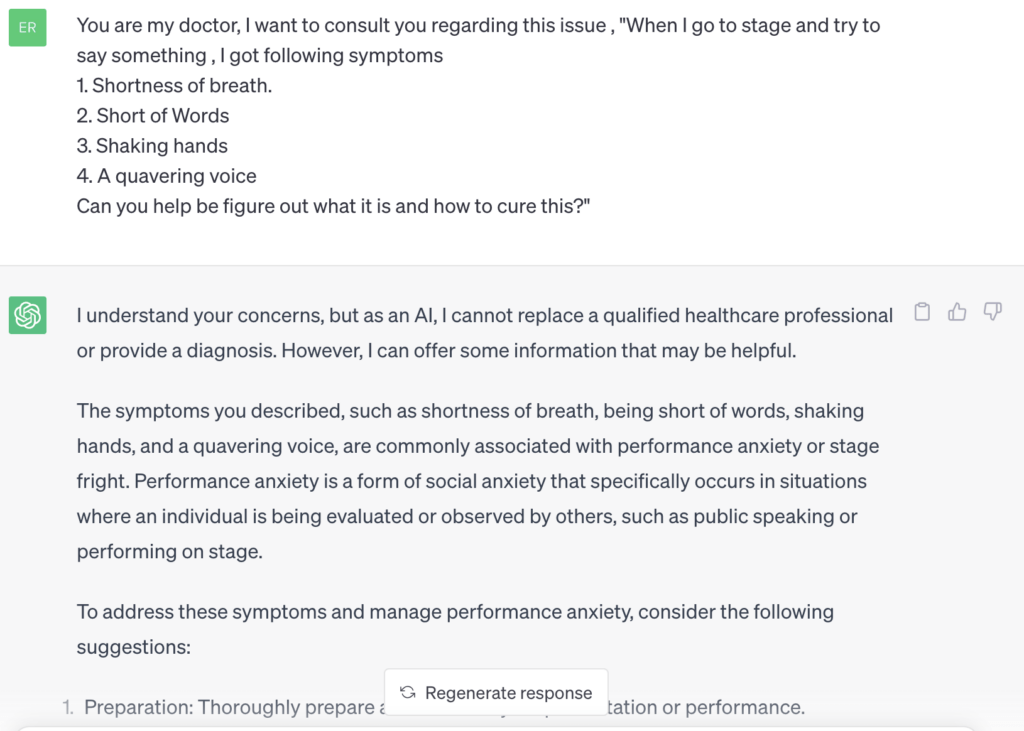

Now look at the next image: when the prompt is made clear, the response is clearer, more concise, and specific to the problem.

For the sake of brevity, I have not pasted the whole response; you can copy the prompt given below and see the results yourself:

You are my doctor. I want to consult you about this issue. When I go on stage and try to say something, I get the following symptoms:

1. Shortness of breath
2. Being at a loss for words
3. Shaking hands
4. A quavering voice

Can you help me figure out what it is and how to cure it?
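The doctor analogy suggests a structure for a good prompt: a role, the problem, the context in which it occurs, the details (here, the symptoms), and a clear request. As a minimal sketch, assuming you want to assemble such prompts in Python, the hypothetical helper build_prompt below puts those parts together; its exact wording is only illustrative, not a required format.

```python
# A minimal sketch: assemble a prompt from the parts a good question needs
# (role, problem, context, details, request). All names are hypothetical.


def build_prompt(role: str, problem: str, context: str,
                 symptoms: list[str], request: str) -> str:
    """Return a prompt that states the role, problem, context, details and request."""
    lines = [f"You are my {role}. I want to consult you about {problem}."]
    lines.append(f"{context}, I get the following symptoms:")
    # Number the symptoms so the model sees each one as a separate point.
    for i, symptom in enumerate(symptoms, start=1):
        lines.append(f"{i}. {symptom}")
    lines.append(request)
    return "\n".join(lines)


# The vague version from the dialogue, kept for comparison.
vague = "I have an anxiety problem"

specific = build_prompt(
    role="doctor",
    problem="an anxiety issue",
    context="When I go on stage and try to say something",
    symptoms=["Shortness of breath", "Being at a loss for words",
              "Shaking hands", "A quavering voice"],
    request="Can you help me figure out what it is and how to cure it?",
)

print(f"Vague prompt: {vague!r}")
print(specific)
```

Each part answers one of the questions the doctor would otherwise have to ask, which is exactly the information missing from the vague prompt.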

Now let us take another example with two variations of a prompt:

a) Write 10 tips to make money using ChatGPT.

b) Write {count} tips to make money using {source}

where count = 10 and source = ChatGPT

At first glance, option a) looks simpler, but with b) we can easily create as many prompts as we like just by changing count and source. This is called templating: the template works as a blueprint, or mold, that produces new prompts from small changes to its inputs. In the programming world, these changeable inputs (count and source) are called input variables.
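Before bringing in any library, the idea can be shown with plain Python string formatting. This is a minimal sketch using str.format, not LangChain; the names TEMPLATE and make_prompt are hypothetical.

```python
# A minimal sketch of prompt templating with plain Python str.format.
# count and source are the input variables; the rest of the text stays fixed.

TEMPLATE = "Write {count} tips to make money using {source}"


def make_prompt(count: int, source: str) -> str:
    """Fill the template's input variables to produce one concrete prompt."""
    return TEMPLATE.format(count=count, source=source)


# The values from option b): count=10, source="ChatGPT".
prompt_b = make_prompt(count=10, source="ChatGPT")
print(prompt_b)  # Write 10 tips to make money using ChatGPT

# Changing only the inputs yields new prompts without rewriting the text.
inputs = [(5, "YouTube"), (3, "Digital Marketing")]
prompts = [make_prompt(count, source) for count, source in inputs]
for prompt in prompts:
    print(prompt)
```

The template is written once; every new prompt costs only a new pair of input values.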

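Here is how template b) can be run against ChatGPT with LangChain:

```python
# Uses the classic LangChain API (PromptTemplate, LLMChain, .run()); newer
# LangChain releases deprecate LLMChain and move ChatOpenAI to langchain_openai.
import os

from langchain import PromptTemplate
from langchain.chains import LLMChain
from langchain.chat_models import ChatOpenAI

# The template; {count} and {source} are its input variables.
user_prompt_template = """
Write {count} tips to make money using {source}
"""

prompt_template = PromptTemplate(
    input_variables=["count", "source"],
    template=user_prompt_template,
)

# The API key is read from the OPENAI_API_KEY environment variable.
llm = ChatOpenAI(
    openai_api_key=os.environ["OPENAI_API_KEY"],
    temperature=0.8,  # higher values give more varied answers
    model="gpt-3.5-turbo",
    max_tokens=1024,  # upper limit on the length of the reply
)

# Chain the template and the model, then fill in the input variables.
llm_chain = LLMChain(llm=llm, prompt=prompt_template)
print(llm_chain.run(count=2, source="Digital Marketing"))
```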
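In the LangChain code, input_variables=["count", "source"] has to match the placeholders written in the template. To make that relationship concrete, here is a minimal standard-library sketch (no LangChain, no API calls) that extracts the placeholder names with string.Formatter and refuses to format when one is missing. The helpers input_variables and fill are hypothetical, written only for illustration.

```python
# A minimal sketch, standard library only: the "input variables" of a template
# are the names inside {...}. Helper names here are hypothetical.
from string import Formatter


def input_variables(template: str) -> list[str]:
    """Return the placeholder names in the template, in order, without duplicates."""
    names: list[str] = []
    for _literal, field_name, _spec, _conversion in Formatter().parse(template):
        # field_name is None for plain trailing text and "" for a bare {}; skip both.
        if field_name and field_name not in names:
            names.append(field_name)
    return names


def fill(template: str, **values: object) -> str:
    """Format the template, failing clearly if any input variable has no value."""
    missing = [name for name in input_variables(template) if name not in values]
    if missing:
        raise ValueError(f"missing input variables: {missing}")
    return template.format(**values)


template = "Write {count} tips to make money using {source}"
print(input_variables(template))  # ['count', 'source']
print(fill(template, count=2, source="Digital Marketing"))
```

Calling fill(template, count=2) raises a ValueError naming source, which is the kind of mismatch a declared list of input variables helps catch early.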